Cloud service latency directly affects how fast and responsive cloud applications feel. This article covers the main types of latency, their common causes, ways to measure them, and best practices for reducing them. With a solid understanding of cloud service latency, you can improve user experience, use your infrastructure more efficiently, and get more out of your cloud investment. Let’s look at how latency arises and how you can keep your cloud services fast and responsive.

Understanding Cloud Service Latency

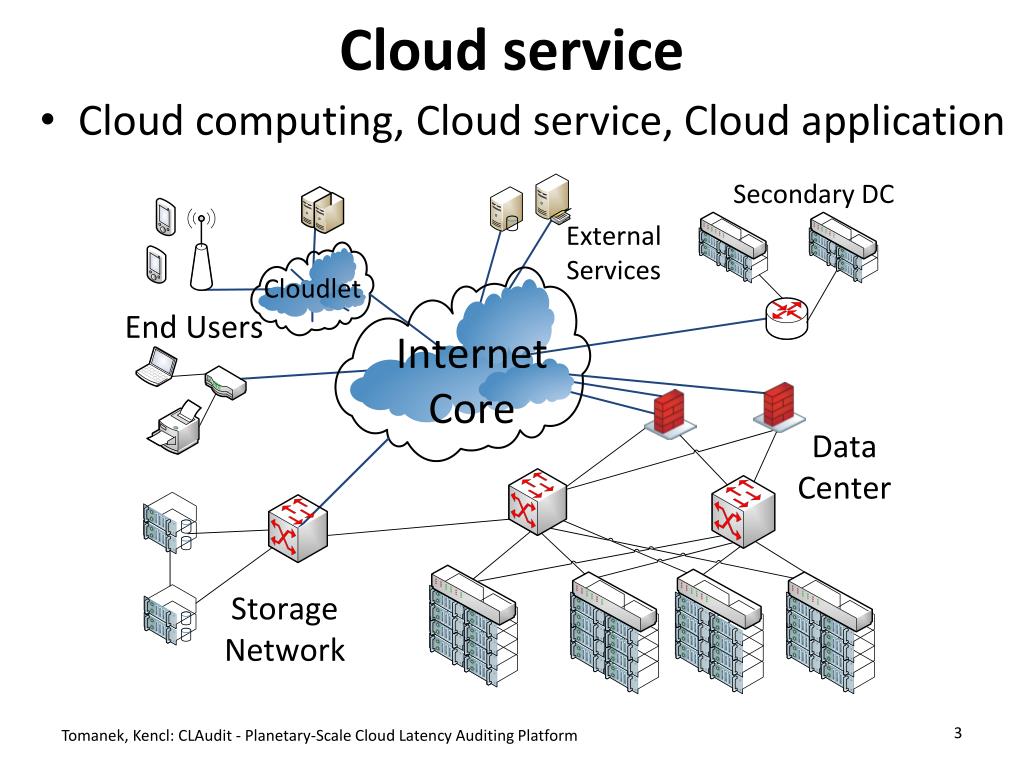

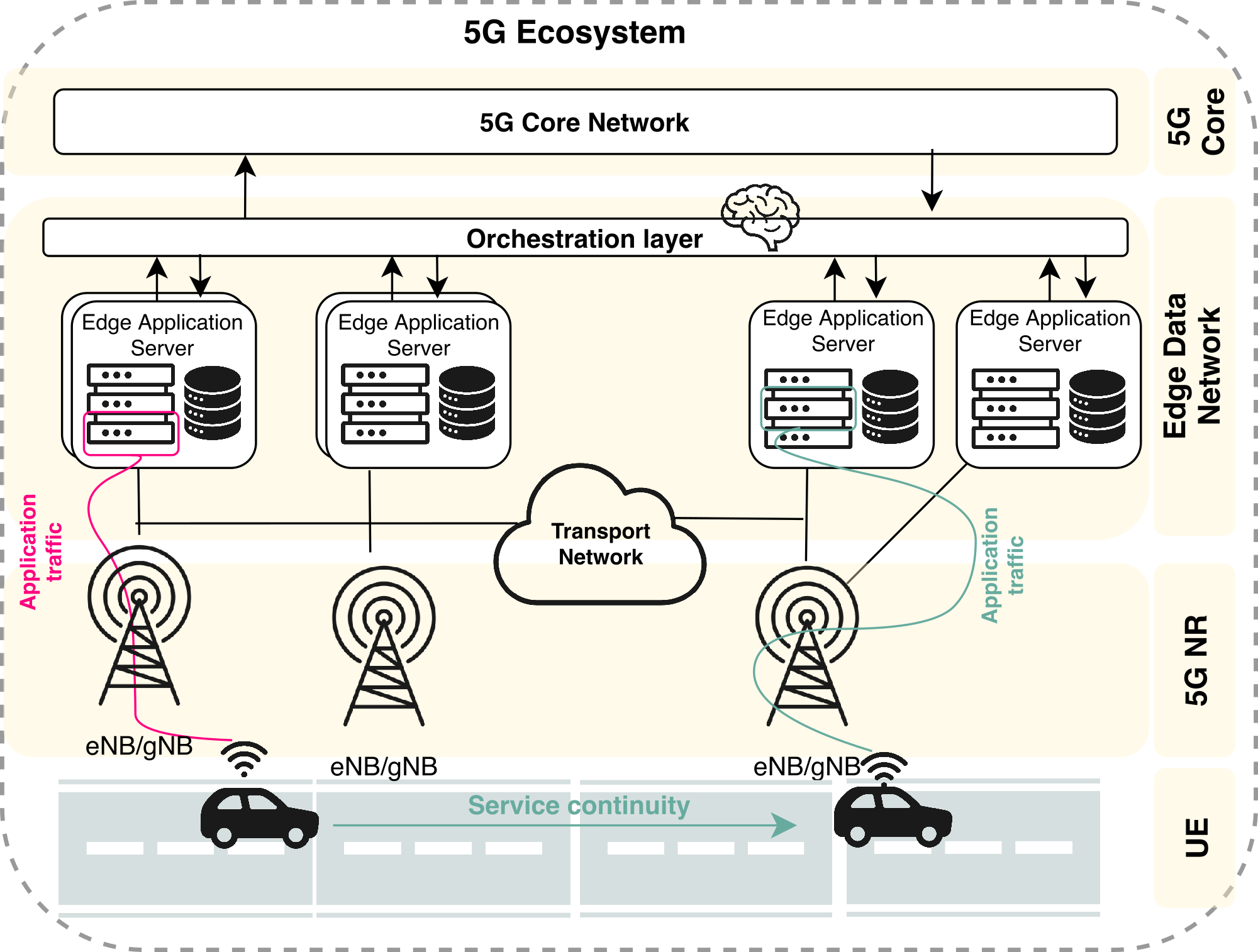

Cloud service latency is the time it takes for a request to travel from a user’s device to a cloud service and for the response to come back, including the time the service spends processing it. This delay largely determines how responsive a cloud application feels. High latency shows up as slow page loads, timeouts and dropped connections, and generally degraded performance. Understanding what contributes to latency is the first step toward improving cloud service efficiency.

Types of Cloud Service Latency

Understanding Different Latency Types in Cloud Services

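Network latency is the delay incurred as data traverses the network. It depends on the physical distance the signal travels, the number of hops along the path, queuing at congested links, and packet loss, which forces retransmissions. Available bandwidth also matters, mainly for large payloads, because it limits how quickly data can be pushed onto the link.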

Server latency is the time a server spends handling an incoming request, including any time the request waits in a queue before processing starts. Tuning server performance and capacity is critical to keeping this delay low.

Application latency is the time the software itself takes to produce a response, for example while running business logic or querying a database. Efficient code and a well-designed application architecture keep it down.

End-to-end latency covers every stage of a request, from the moment the user sends it to the moment the final response arrives. Because it is roughly the sum of the network, server, and application components, reducing any one of them improves overall performance.
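To make the idea of a latency budget concrete, here is a minimal Python sketch that treats end-to-end latency as the simple sum of network, server, and application time. That additive model is an assumption (in real systems some stages overlap), and the numbers are hypothetical rather than measured.

```python
from dataclasses import dataclass


@dataclass
class LatencyBreakdown:
    """Per-request latency components in milliseconds (illustrative model)."""
    network_ms: float      # time on the wire, both directions
    server_ms: float       # queuing and request handling on the server
    application_ms: float  # time the application code spends building the response

    def total_ms(self) -> float:
        # End-to-end latency approximated as the sum of its components.
        return self.network_ms + self.server_ms + self.application_ms

    def shares(self) -> dict[str, float]:
        # Fraction of the end-to-end latency contributed by each component.
        total = self.total_ms()
        return {
            "network": self.network_ms / total,
            "server": self.server_ms / total,
            "application": self.application_ms / total,
        }


# Hypothetical numbers for a single request.
request = LatencyBreakdown(network_ms=60.0, server_ms=15.0, application_ms=25.0)
print(request.total_ms())  # 100.0
print(request.shares())
```

Expressing each component as a share of the total shows where optimization effort pays off most; in this made-up example the network accounts for 60% of the delay.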

Causes of High Cloud Service Latency

Distance Between User and Server

The physical distance between the user and the server plays a major role in cloud service latency. Signals in optical fiber travel at roughly two-thirds the speed of light, so every additional kilometer adds delay that no amount of tuning can remove. Placing servers closer to users and using content delivery networks (CDNs) helps mitigate this type of latency.
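A quick calculation shows why distance sets a floor on latency. The sketch below assumes light travels through fiber at about 200 km per millisecond and ignores routing detours and processing, so it gives a lower bound rather than a prediction.

```python
# Approximate speed of light in optical fiber: about two-thirds of c,
# roughly 200,000 km/s, which is 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone.

    Assumes a straight fiber path; real routes are longer and add
    processing and queuing delays on top of this figure.
    """
    return 2 * distance_km / FIBER_KM_PER_MS


for km in (100, 1_000, 6_000):
    print(f"{km:>5} km -> at least {min_round_trip_ms(km):.0f} ms round trip")
```

By this estimate, a user 6,000 km from the server waits at least 60 ms per round trip before the server does any work, which is why moving servers or content closer to users helps.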

Impact of Network Congestion

Network congestion occurs when more traffic tries to cross a link than it can carry. Packets queue up at routers, some are dropped, and the resulting retransmissions push latency higher still. Quality of Service (QoS) mechanisms, which prioritize latency-sensitive traffic, together with good network management, can alleviate congestion-related latency.
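The link between packet loss and latency can be illustrated with a deliberately simplified model, sketched here in Python. It assumes each transmission is lost independently with probability p and that every loss costs one full retransmission timeout; real TCP recovers faster in some cases (fast retransmit) and slower in others (exponential backoff). The 40 ms round-trip time and 200 ms timeout are hypothetical values.

```python
def expected_delivery_ms(rtt_ms: float, rto_ms: float, loss_rate: float) -> float:
    """Expected time to deliver one packet and receive its acknowledgment.

    Simplified model: each attempt is lost independently with probability
    `loss_rate`, and every loss costs one retransmission timeout (`rto_ms`)
    before the sender tries again. Fast retransmit and exponential
    backoff are ignored.
    """
    if not 0.0 <= loss_rate < 1.0:
        raise ValueError("loss_rate must be in [0, 1)")
    # Expected number of failed attempts before the first success
    # (geometric distribution): p / (1 - p).
    expected_failures = loss_rate / (1.0 - loss_rate)
    return rtt_ms + expected_failures * rto_ms


# Hypothetical path: 40 ms RTT, 200 ms retransmission timeout.
for p in (0.0, 0.01, 0.05):
    print(f"loss {p:.0%}: {expected_delivery_ms(40, 200, p):.1f} ms")
```

Even under this rough model, 5% loss raises the expected delay on a 40 ms path to about 50 ms, which is why congestion that causes drops hurts latency so much.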

Dealing with Server Overload

Server overload occurs when a server receives more requests than it can process efficiently. Requests wait in queues, response times grow, and latency climbs. Load balancing, dynamic resource scaling, and server performance tuning help prevent overload and keep latency low.
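Load balancers can use several policies. One common choice is least connections, which sends each new request to the backend currently handling the fewest. The Python sketch below shows only that selection logic; the backend names are hypothetical, and production load balancers add health checks, weights, and failure handling.

```python
class LeastConnectionsBalancer:
    """Route each request to the backend with the fewest in-flight requests.

    Minimal in-process sketch; backend names are hypothetical.
    """

    def __init__(self, backends: list[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        # In-flight request count per backend.
        self.active = {name: 0 for name in backends}

    def acquire(self) -> str:
        # Pick the least-loaded backend; ties go to the first one listed.
        backend = min(self.active, key=self.active.__getitem__)
        self.active[backend] += 1
        return backend

    def release(self, backend: str) -> None:
        # Call when the request routed to `backend` has finished.
        self.active[backend] -= 1


lb = LeastConnectionsBalancer(["app-1", "app-2", "app-3"])
first = lb.acquire()   # app-1
second = lb.acquire()  # app-2
lb.release(first)      # app-1 is idle again
third = lb.acquire()   # app-1, now the least-loaded backend
```

Compared with plain round-robin, least connections adapts when some requests take much longer than others, so a slow backend stops receiving new work until it catches up.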

Tackling Slow Application Code

Slow, inefficient application code can add significant latency. Efficient algorithms and careful use of resources such as CPU, memory, and database connections are key to reducing application latency. Regular code reviews, performance profiling and tuning, and caching of frequently requested results all make applications more responsive.
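Caching is one of the simplest ways to cut application latency: if a result was computed recently, return it instead of repeating slow work. The following is a minimal in-process time-to-live (TTL) cache in Python. It is a sketch under stated assumptions: it is not thread-safe and never evicts old keys, so a real service would bound its size or use a dedicated cache. For pure functions that never need expiry, the standard library’s `functools.lru_cache` already covers this. `slow_lookup` is a hypothetical stand-in for real work.

```python
import time
from typing import Callable


class TTLCache:
    """Keep computed values for `ttl_seconds` so repeat requests skip slow work."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without waiting
        self._entries: dict[str, tuple[float, object]] = {}

    def get_or_compute(self, key: str, compute: Callable[[], object]) -> object:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]  # cache hit: skip the slow computation
        value = compute()    # cache miss or expired entry: do the slow work
        self._entries[key] = (now + self.ttl, value)  # store with its expiry time
        return value


calls = []


def slow_lookup() -> dict:
    # Hypothetical stand-in for a slow database query or remote API call.
    calls.append(1)
    return {"name": "example"}


cache = TTLCache(ttl_seconds=30)
cache.get_or_compute("user:42", slow_lookup)
cache.get_or_compute("user:42", slow_lookup)  # served from the cache
print(len(calls))  # 1
```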

In short, addressing high cloud service latency requires a holistic approach that spans network optimization, server management, and efficient application development. By actively managing distance, network congestion, server load, and code efficiency, organizations can improve cloud service performance and deliver a smooth user experience.

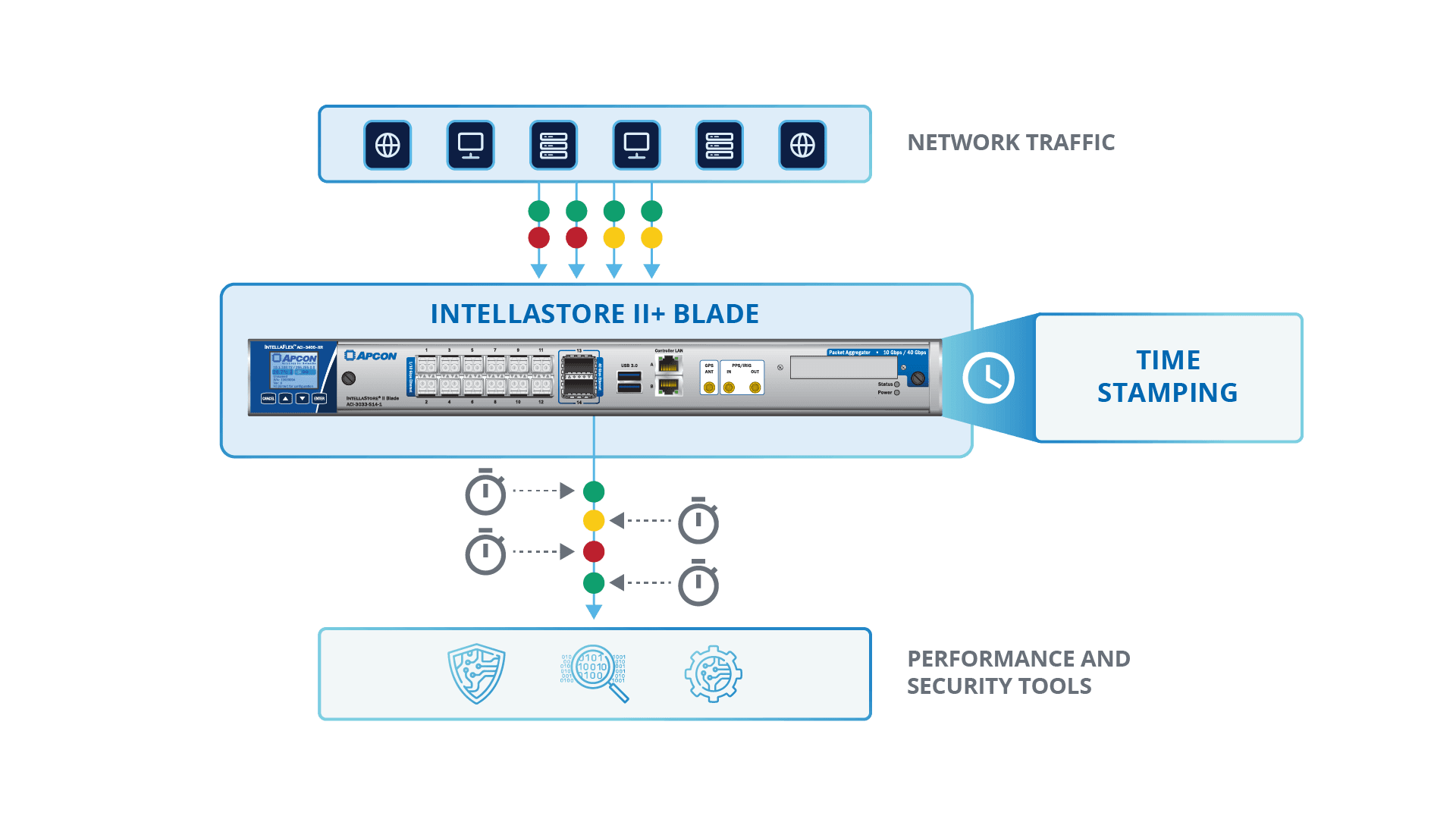

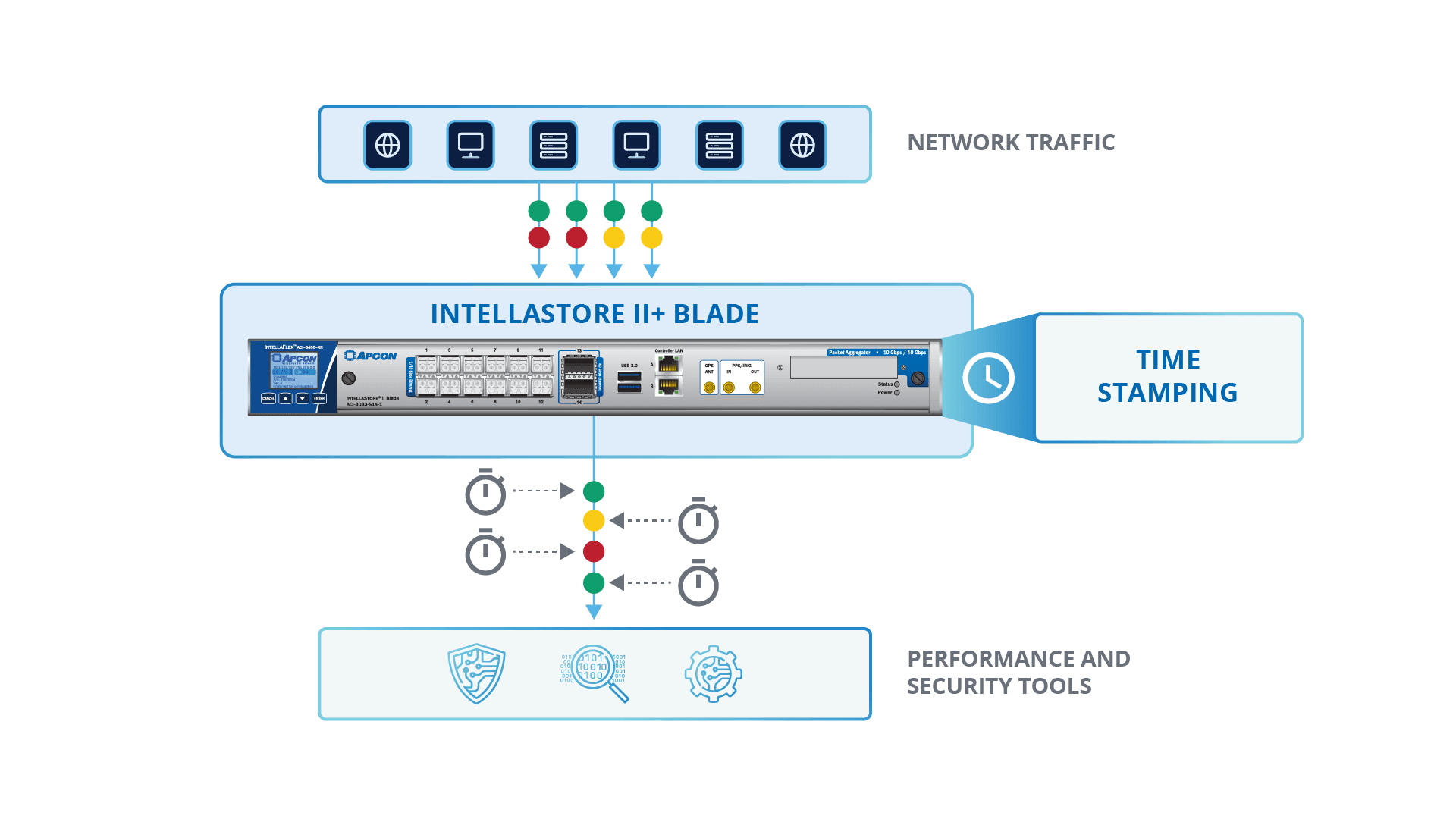

Measuring Cloud Service Latency

Techniques for Measuring Latency

Ping tests are a basic way to measure network latency: they send ICMP echo requests to a host and report the round-trip time of each reply. Traceroute complements ping by revealing the path packets take and the delay at each hop, which helps locate network bottlenecks. Load testing goes further by simulating realistic traffic, showing how end-to-end latency and overall system performance behave under load.
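Because many cloud hosts and firewalls block ICMP, and sending raw ICMP packets usually requires elevated privileges, timing a TCP handshake is a common unprivileged alternative to ping: a connect takes roughly one network round trip. The Python sketch below measures connect times to a host and port of your choice; it approximates ping and is not a replacement for `ping` or `traceroute`.

```python
import socket
import time


def tcp_connect_times_ms(host: str, port: int, samples: int = 5,
                         timeout: float = 2.0) -> list[float]:
    """Time `samples` TCP handshakes to host:port, in milliseconds.

    Connect time is roughly one network round trip, so this can stand in
    for ping when ICMP is blocked or raw sockets need extra privileges.
    """
    # Resolve the name once so DNS lookup time is not counted in each sample.
    family, socktype, proto, _, address = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM)[0]
    results = []
    for _ in range(samples):
        with socket.socket(family, socktype, proto) as sock:
            sock.settimeout(timeout)
            start = time.perf_counter()
            sock.connect(address)  # returns once the handshake completes
            results.append((time.perf_counter() - start) * 1000)
    return results
```

Calling `tcp_connect_times_ms("example.com", 443)`, for instance, returns five round-trip estimates to that HTTPS endpoint; the spread between the fastest and slowest samples is often as informative as the average.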

Application Performance Monitoring Tools for Enhanced Insights

Application performance monitoring (APM) tools are central to measuring cloud service latency accurately. By continuously recording application response times, they reveal latency trends and help identify performance bottlenecks, so teams can make adjustments before users notice a problem.
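APM tools collect response times automatically, but the core idea fits in a few lines. The Python sketch below wraps a function in a decorator that records how long each call takes, and summarizes the samples with a nearest-rank percentile. Percentiles such as p95 or p99 are used instead of averages because they expose the slow tail that a mean can hide. `handle_request` is a hypothetical stand-in for real application work.

```python
import functools
import math
import time
from typing import Callable


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    `pct` percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def record_latency(store: list[float]) -> Callable:
    """Decorator factory: append each call's duration (ms) to `store`."""
    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            finally:
                # Record the duration even if the call raised an exception.
                store.append((time.perf_counter() - start) * 1000)
        return wrapper
    return decorator


response_times: list[float] = []


@record_latency(response_times)
def handle_request(n: int) -> int:
    # Hypothetical handler standing in for real application work.
    return sum(range(n))


for _ in range(100):
    handle_request(10_000)
print(f"p50={percentile(response_times, 50):.3f} ms  "
      f"p99={percentile(response_times, 99):.3f} ms")
```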

Strategies for Reducing Cloud Service Latency

Choosing a Nearby Server Location

Choosing a server location close to your users shortens the distance data must travel, which lowers latency. Placing servers in the regions where your users are concentrated improves response times.
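If you can measure latency from your users’ locations to each candidate region, choosing a region becomes a small data problem. The following Python sketch picks the region with the lowest median latency. The region names and numbers are hypothetical; in practice you would collect samples from many user locations and weight them by traffic.

```python
from statistics import median


def pick_region(samples_ms: dict[str, list[float]]) -> str:
    """Return the region whose median measured latency is lowest.

    The median is used instead of the mean so a few slow outliers
    do not skew the choice.
    """
    return min(samples_ms, key=lambda region: median(samples_ms[region]))


# Hypothetical measurements from one user location to three candidate regions.
measurements = {
    "region-a": [82.0, 79.5, 85.1, 240.0],
    "region-b": [31.2, 29.8, 30.5, 33.0],
    "region-c": [55.4, 57.0, 54.9, 56.2],
}
print(pick_region(measurements))  # region-b
```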

Optimizing Network Connectivity

Upgrade to higher-capacity network links and take steps to relieve congestion, such as prioritizing latency-sensitive traffic. A stronger network infrastructure speeds up data transfer and reduces delays.

Scaling the Server Infrastructure

Expand server capacity to keep up with growing traffic. Scaling up (larger servers) or scaling out (more servers) keeps request processing fast and prevents latency spikes during peak usage.
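Autoscalers commonly decide how far to scale with a proportional rule: multiply the current replica count by the ratio of the observed metric to its target, and round up. The Kubernetes Horizontal Pod Autoscaler documents this same ratio. The Python function below is a minimal sketch of that rule with hypothetical minimum and maximum bounds; real autoscalers also apply tolerances and stabilization windows so they do not react to every brief spike.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Proportional scaling rule, clamped to [min_replicas, max_replicas].

    Scales the replica count by the ratio of the observed metric (for
    example average CPU utilization) to its target, rounding up so
    capacity is not undersized.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    wanted = math.ceil(current * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, wanted))


# 4 replicas at 90% average CPU with a 60% target -> scale out to 6.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
```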

Optimizing Application Code

Improve application efficiency by optimizing code for faster execution. Faster code reduces latency and makes the application more responsive for users.
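One code-level optimization that directly lowers latency is avoiding sequential waits on independent slow operations. The Python sketch below simulates three downstream calls with `asyncio.sleep` (the `fetch` function is hypothetical) and compares awaiting them one at a time with running them concurrently via `asyncio.gather`. This only helps when the calls do not depend on each other’s results.

```python
import asyncio
import time


async def fetch(name: str, delay_s: float) -> str:
    # Hypothetical downstream call; asyncio.sleep simulates the network wait.
    await asyncio.sleep(delay_s)
    return name


async def run_sequentially(names: list[str], delay_s: float) -> list[str]:
    # Each call starts only after the previous one finishes.
    return [await fetch(n, delay_s) for n in names]


async def run_concurrently(names: list[str], delay_s: float) -> list[str]:
    # All calls are in flight at the same time; results keep input order.
    return list(await asyncio.gather(*(fetch(n, delay_s) for n in names)))


def timed(coro) -> tuple[list[str], float]:
    # Run a coroutine to completion and report its wall-clock duration.
    start = time.perf_counter()
    result = asyncio.run(coro)
    return result, time.perf_counter() - start


names = ["users", "orders", "inventory"]
seq_result, seq_s = timed(run_sequentially(names, 0.1))
con_result, con_s = timed(run_concurrently(names, 0.1))
print(f"sequential: {seq_s:.2f} s, concurrent: {con_s:.2f} s")
```

With three simulated 100 ms calls, the sequential version takes about 300 ms and the concurrent one about 100 ms, because the total wait drops from the sum of the delays to the longest single delay.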

Effect of Latency on Cloud Service Performance

Slow Loading Times

The most visible effect of high latency is slow loading times. Cloud applications feel sluggish and unresponsive, which frustrates users who expect near-instant responses and reduces the efficiency and usability of the service.

Dropped Connections

Excessive latency can lead to dropped connections when requests exceed their timeouts. This disrupts user interactions with applications, interrupts work in progress, and, if an operation fails partway through, can leave data in an inconsistent state.
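Clients usually cope with timeouts by retrying, but retrying immediately and in lockstep can make an overloaded service worse. The Python sketch below retries with capped exponential backoff and full jitter. It assumes the operation signals a timeout by raising Python’s built-in `TimeoutError`, and it should only wrap idempotent operations, since retrying a request that partially succeeded is one way data ends up inconsistent.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(operation: Callable[[], T], attempts: int = 4,
                       base_delay_s: float = 0.2, max_delay_s: float = 5.0,
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `operation`, retrying on TimeoutError with capped exponential
    backoff and full jitter. Re-raises the last timeout if all attempts fail."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return operation()
        except TimeoutError:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller handle the timeout
            # Backoff ceiling doubles each attempt: 0.2 s, 0.4 s, 0.8 s, ...
            ceiling = min(max_delay_s, base_delay_s * 2 ** attempt)
            # Full jitter: wait a random time up to the ceiling so many clients
            # do not retry against the service at the same moment.
            sleep(random.uniform(0, ceiling))
    raise RuntimeError("unreachable")  # keeps type checkers satisfied
```

The `sleep` parameter exists so the backoff can be tested without real waiting; in normal use you would leave it as `time.sleep`.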

Poor User Experience

High latency often leads to a poor user experience. Delayed responses and lagging interfaces frustrate users and erode their satisfaction and loyalty, so keeping latency low is crucial for delivering a smooth, responsive cloud service.

Reduced Productivity

Sluggish cloud applications also hurt employee productivity. Employees rely on responsive cloud services to do their work, and delays in retrieving data or in application responses disrupt workflows, reduce productivity, and can have a real business impact. Keeping latency low is therefore essential in cloud-dependent work environments.

Best Practices for Minimizing Cloud Service Latency

Monitoring Latency Regularly

Monitoring latency metrics regularly helps you spot problems before they escalate. By tracking latency data closely, IT teams can pinpoint the sources of delay and address them promptly, keeping cloud services fast and users satisfied.
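A simple way to turn a stream of latency measurements into an alert signal is an exponentially weighted moving average (EWMA), the same kind of smoothing TCP applies to its round-trip-time estimate (RFC 6298 uses a weight of 1/8). The Python class below is a minimal sketch; the threshold is a hypothetical value you would set from your own service-level objectives.

```python
from typing import Optional


class LatencyMonitor:
    """Smooth latency samples with an EWMA and flag when the smoothed
    value crosses a threshold."""

    def __init__(self, threshold_ms: float, alpha: float = 0.125) -> None:
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.threshold_ms = threshold_ms
        self.alpha = alpha  # weight given to each new sample
        self.smoothed_ms: Optional[float] = None  # no estimate before the first sample

    def observe(self, sample_ms: float) -> bool:
        """Add one sample; return True if the smoothed latency is over threshold."""
        if self.smoothed_ms is None:
            self.smoothed_ms = sample_ms
        else:
            self.smoothed_ms = ((1 - self.alpha) * self.smoothed_ms
                                + self.alpha * sample_ms)
        return self.smoothed_ms > self.threshold_ms


monitor = LatencyMonitor(threshold_ms=100)
for sample in (50, 400, 400):
    print(sample, monitor.observe(sample), monitor.smoothed_ms)
```

With the default weight, one 400 ms spike after a 50 ms baseline moves the average only to about 94 ms, so the 100 ms threshold is not crossed; a second consecutive slow sample pushes it to about 132 ms and triggers the alert. Larger weights react faster but alert on more noise.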

Leveraging Content Delivery Networks (CDN)

A content delivery network (CDN) can significantly reduce network latency by caching content on servers located close to end users. Because these edge servers are distributed around the globe, requests for cached content travel a much shorter distance, which improves response times and overall user experience. Requests for content that is not in the cache still have to reach the origin server.
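The benefit of a CDN depends heavily on its cache hit ratio. The Python sketch below computes average latency as a weighted mix of edge hits and origin misses, using hypothetical figures of 20 ms for a hit and 200 ms for a miss. It treats the miss cost as a single number, which should include the trip through the edge to the origin and back.

```python
def effective_latency_ms(hit_ratio: float, edge_ms: float, origin_ms: float) -> float:
    """Average latency when a fraction `hit_ratio` of requests is served by the edge.

    Hits are answered by a nearby edge server; misses must be fetched from
    the origin, modeled here as a single `origin_ms` figure.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * edge_ms + (1.0 - hit_ratio) * origin_ms


# Hypothetical figures: 20 ms for an edge hit, 200 ms for an origin miss.
for ratio in (0.0, 0.5, 0.9):
    print(f"hit ratio {ratio:.0%}: {effective_latency_ms(ratio, 20, 200):.0f} ms")
```

In this made-up example, raising the hit ratio from 50% to 90% cuts average latency from 110 ms to about 38 ms.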

Optimizing Application Code

Optimizing application code is an ongoing task. Regularly reviewing and profiling the codebase, and tuning its slowest paths, reduces latency and leads to faster response times and happier users.

Selecting the Right Cloud Provider

Choose a cloud provider with a reliable, low-latency network. Comparing providers on measured network performance, data center locations, and infrastructure reliability helps you avoid latency problems and deliver a consistently good user experience.

By following these best practices, IT professionals and developers can minimize cloud service latency, improve application performance, and give users a smooth, responsive cloud experience.